A Founder's Guide: Startups as Hypotheses

Written March 2021. Published June 2021.

Objective

This document describes a framework for developing startups in which the core business idea is treated as a hypothesis and subjected to a series of experiments, each designed to give us as much confidence as possible about whether that hypothesis holds. It aims to provide a unifying philosophical grounding for how we make business and strategy decisions in the early stages (before product-market fit). It is not intended to be a how-to guide. Rather, it is a way of adopting a shared set of values early on.

Scientific Method

It’s hard to know what to do next.

Starting a company involves a lot of variables, and almost every common failure mode boils down to not having enough information or prioritizing the wrong information. Being rigorous and disciplined about company strategy is important, but it can be hard to reason about what the optimal strategy should be.

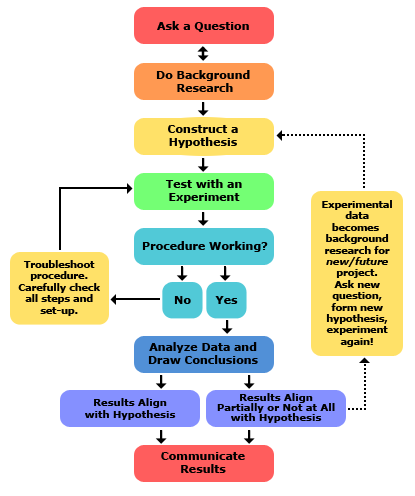

People use all sorts of fun metaphors to reason about startups, such as ‘startups are a bet on the future paid for with time and effort’ or ‘early-stage startups are like hunting in prehistoric times’. My personal favorite is ‘startups are a hypothesis’. I like this framing because it invokes the scientific method. Hopefully we were all taught this in school, but as a recap: the core purpose of the scientific method is to eliminate bias and iterate, based on data, toward a better understanding of the world. This is done by constructing (and pre-registering) a hypothesis, defined as a proposed explanation for an observed phenomenon. The hypothesis is then validated (or disproved) through an experiment specifically designed to gather evidence for or against the claim. Ideally, the experiment is designed so that its results have only one plausible explanation, one that either supports or refutes the hypothesis.

Middle school flashbacks.

Hypothesis Testing

Every company has, at its core, a hypothesis about markets: namely, that the market behaves in a certain way X, and that, based on this limited information, we believe Y, which justifies the existence of our product. This core hypothesis can then spin out into sub-hypotheses about product areas, individual products, and even product features.

A hypothesis is not valuable in itself. The information that comes out of testing a hypothesis against the world is what is valuable. To thrive, a company must validate every hypothesis in the chain using data-driven experiments, and it must throw out the hypotheses that don’t match the real world. Many startups die because they build something the market doesn’t want or need. This points to a lack of proper hypothesis testing, or to an unwillingness to read the writing on the wall and let go of an individual ‘creative vision’.

In the early stages of a startup, the goal is to find product-market fit. I think of this as ‘create hypotheses about the world, run experiments, and discard your hypotheses until one of them proves true’. A popular phrase in the startup world is ‘fail fast and fail often’, which maps naturally onto the hypothesis framework. There is no value in pursuing a hypothesis for its own sake. A startup should be willing, even eager, to discover evidence that the hypothesis it is pursuing is flawed, and it should do so as quickly as possible to minimize wasted time. Fail fast. After failing, the startup should pivot, design a new experiment, and go again. Fail often.

Failure is a strange word here, because whenever a startup disproves a hypothesis and moves on, it has actually avoided the only failure that matters: pursuing dead ends.

Experiments

Crafting a good experiment is highly dependent on the hypothesis being tested. In general, however, a good experiment will have some or all of the following traits:

The experiment...

- is directly based on user feedback or user behavior in some way.

- has clear, quantifiable metrics, so that it is easy to determine whether it failed or succeeded (this prevents bias).

- has few possible reasons for success or failure besides the hypothesis in question.

- is repeatable with different cohorts of people.

- generates a lot of useful information about the world.

- is cheap to run.

Hypothesis Testing and Company Lifecycle

Hypothesis testing should occur at every stage of the company lifecycle, with each experiment feeding into the next in the pipeline, and with each subsequent experiment becoming more expensive and requiring more commitment to run. If an experiment fails at any point, you need to backtrack and refine the hypothesis.

This is best illustrated through an example. Jake wants to found a social media platform for dogs. His hypothesis is, roughly speaking, ‘Dog owners love their dogs and love to meet other people who love dogs, so there is an unmet need for a dog-based social media platform’.

- First, he runs the most basic experiment: does he personally think this company will be successful? This is also the cheapest experiment to run, requiring no additional commitment. Jake asks himself and answers in the affirmative. Cost: 10 min, $0.

- Next, Jake sets out to determine whether dog owners in his network would use this platform. He puts together a survey and sends it to a bunch of friends who own dogs. They all answer in the affirmative. Cost: 1h, $0.

- Jake then hires someone on Upwork to compile 300 email addresses of dog owners and sends out an email campaign for further validation. For each person who responds, he does a one-hour interview to gauge their need for this product. Cost: 10h, $50-100.

- Jake is now fairly confident in the external market need, so he starts building a product. This may require expertise outside his current skill set, so he may have to hire someone to build a rough prototype. The prototype itself embodies many sub-hypotheses, including specific features and designs. He puts the prototype on the web and measures user engagement through surveys and analytics trackers. Cost: 30h, $1000.

- Users don’t seem very engaged. It turns out that even though they said they like meeting dog owners, in practice the messaging/IM features get no traction. Instead, users engage heavily with pictures of dogs. Jake formulates a new product hypothesis (a very minimal product that only streams uploaded dog pictures) and builds and releases a new prototype. Cost: 30h, $1000.

- Users really enjoy this product. Feedback indicates demand for a cat-specific spin-off. To run this experiment, Jake needs to scale the company up. He hires a full team to keep adding features and to maintain infrastructure. Cost: full-time, $100k.

And so on. It is easy to see how these individual experiments let Jake battle-test what he is working on, fail fast and fail often, and make calculated next hypotheses based on new information. At each step, if Jake got a negative result, he could return to the previous step and tweak the hypothesis using what he had learned about the world. With enough repetition, Jake will eventually hit on something that works.
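For readers who think in code, the pipeline in Jake's example can be sketched as a loop that runs experiments in order of increasing cost and stops at the first failure, so nothing is spent on the later, more expensive steps. This is a minimal illustration under stated assumptions, not a tool: the `Experiment` and `PipelineResult` types and the `run_pipeline` function are hypothetical, each experiment's outcome is hard-coded to mirror the story rather than measured, and the email step uses the upper end of its $50-100 estimate.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Experiment:
    """One step in a validation pipeline (hypothetical structure)."""
    name: str
    hours: float
    dollars: float
    run: Callable[[], bool]  # True if the hypothesis survived this test


@dataclass
class PipelineResult:
    passed: List[str]      # experiments the hypothesis survived
    failed: Optional[str]  # first experiment that failed, or None
    hours_spent: float
    dollars_spent: float


def run_pipeline(experiments: List[Experiment]) -> PipelineResult:
    """Run experiments in the given order (assumed cheapest first) and
    stop at the first failure, so later steps cost nothing."""
    passed: List[str] = []
    hours = dollars = 0.0
    for exp in experiments:
        hours += exp.hours
        dollars += exp.dollars
        if not exp.run():
            # Fail fast: stop here and go back to refine the hypothesis.
            return PipelineResult(passed, exp.name, hours, dollars)
        passed.append(exp.name)
    return PipelineResult(passed, None, hours, dollars)


# Outcomes are hard-coded to mirror Jake's story; they are not real data.
jake = [
    Experiment("Gut check", 10 / 60, 0, lambda: True),
    Experiment("Friend survey", 1, 0, lambda: True),
    Experiment("Email interviews", 10, 100, lambda: True),  # upper end of $50-100
    Experiment("Messaging prototype", 30, 1000, lambda: False),
]

result = run_pipeline(jake)
print(result.failed)  # → Messaging prototype
```

The failed step is where Jake backtracks: the next pipeline would start from the same validated steps and swap in a revised product hypothesis.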

In general, the goal at each step is to test something highly specific and get meaningful feedback on whether it works or fails. We should do the least amount of work needed to find out whether we fail: ask yourself, ask your team, ask your users (if you have users to burn), ask the market (if it is accessible), and only then build.

Tangent: Experiments, Raising, and Hiring

Startups often wonder when to raise funds. As the example above shows, experiments rarely cost the same. Testing a new feature hypothesis will be significantly less expensive than testing a new product hypothesis, and experiments farther down the line will require additional funding. Eventually, the next obvious experiment in the chain will cost more than the resources available. That is when to raise.

Startups often wonder when to hire. The answer is the same: when you have an experiment you want to run but lack the manpower or skills to run it, you hire.

In practice, you probably need to plan at least six months in advance for both of these, but if your experiments are good and follow a trend line, you should be able to predict well ahead of time when you will need these resources.
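The raising rule, combined with the idea of predicting needs from a trend line, can be sketched as a walk through a planned queue of experiments that reports the first one your cash cannot cover. This is a toy built on assumptions: the `first_unaffordable` function, the plan's dollar figures, and the $5,000 cash balance are all hypothetical, and it ignores burn rate, timing, and fundraising lead time.

```python
from typing import List, Optional, Tuple


def first_unaffordable(plan: List[Tuple[str, float]], cash: float) -> Optional[str]:
    """Return the first planned experiment that remaining cash cannot cover.

    `plan` is a list of (name, dollar cost) pairs in the order you intend
    to run them. Returns None if the whole plan is affordable.
    """
    remaining = cash
    for name, cost in plan:
        if cost > remaining:
            # This is the experiment you must raise money before running.
            return name
        remaining -= cost
    return None


# Hypothetical plan and balance, loosely following Jake's later steps.
plan = [("Dog photo prototype", 1_000), ("Cat spin-off with full team", 100_000)]
print(first_unaffordable(plan, cash=5_000))  # → Cat spin-off with full team
```

Running the same walk over hours or required skills instead of dollars gives the analogous signal for hiring.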