Responding to Ted Chiang

Written February 2023.

It's fun that everyone is getting into AI now. I get to feel a little of what doctors might feel when their relatives talk about vaccines or something.

Two days ago a good friend of mine sent me a New Yorker article by Ted Chiang discussing ChatGPT. Ted is an excellent sci-fi writer -- the man has won approximately a billion awards, and one of his short stories became the basis for the movie Arrival. I highly recommend you read his work; he's great at conveying complex ideas and has an excellent grasp of visual language.

But, you know, I don't think that makes a person particularly qualified to talk about the current state of AI/ML. Kinda like asking Matt Damon about how to create a self-sustaining Mars colony.

Pictured: someone deeply unqualified to talk about rocket science.

Cards on the table: I think the New Yorker piece is wrong. Like, really wrong. And it's been making the rounds, racking up hundreds of thousands of views. And it's potentially harmful, in a Luddite kind of way, which is a strange position for a sci-fi writer to take. So I wanted to respond while there was still a chance that anyone cared. But if you haven't read his piece, go read that first so this response makes more sense.

I'll start with what I like, which is that I agree that GPT can be thought of as a compressed database. I think this is an excellent intuition to have, and a good model of how ML kinda works (remember, though: all models are wrong, but some are useful).

Where I disagree, in reverse order of magnitude:

- there's an undercurrent in Ted's piece that people think about things in a meaningfully different way than these models do, which I don't think is obviously correct;

- people are, explicitly, training deep models on the outputs of other deep models, and it's a very popular and increasingly well-funded field;

- I strongly disagree that there's no use for tools like ChatGPT, to the point where I'm wondering if he's serious about that take.

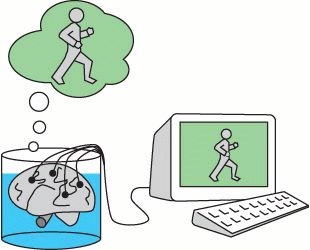

Brains in vats

Of these points, the first one is maybe the most controversial thing to push back on, but I don't think it should be. Ted points out that tools like ChatGPT don't know how to carry the 1, even though they've seen some text to that effect.

That may be true, but I'm afraid Ted may be humanizing GPT more than he should. GPT is as pure a tabula rasa as you can get. It doesn't get any of the evolutionary neural structures that give humans a huge leg up in our world. Worse, it only has a single sensory organ: reading text. By comparison, babies, from the moment they are born, have five continuous streams of data to parse. A month-old baby has terabytes of data to work off. Seen in this context, the whole conversation about carrying the one feels silly -- it's a wildly isolated demand for rigor. If you had a deaf, blind, mute, touchless brain in a vat with no prior understanding of our universe, whose only ability to make sense of the world was through whatever text you had lying around, it would be a miracle if that brain could begin to understand concepts like 'objects can't occupy the same physical space at the same time' or 'gravity is a thing that exists' or any other 'obvious' rules of our universe -- things that a baby gets for free just from crawling around.

Also GPT: what's a captain? and a millennium? and a ship? where are we? what does it mean to be in a place? what is a place? what does it mean to be?

But GPT knows these things.

You can ask GPT a question that demands some knowledge of physics, like 'if I put the mug on the table where is the mug', and it's pretty amazing that it responds 'on the table' instead of 'on the floor'. I think you would be very hard-pressed to find any document on the web that says something to the effect of 'in the real world, objects don't phase through each other'. GPT just... picked this up, from how all of the input text talks about the world and how things in the world interact. So if that's also 'just pattern matching', I think that humans basically learn the exact same way, i.e. 'learning general principles by extrapolation'. (As an aside, Ted has perhaps forgotten how most people learn addition. My 5yo nephew's homework is basically just constant repetition of two-digit addition, over and over and over.)

GPT as an argument for solipsism.

Teachers and Students

I'm going to quote Ted directly here, because I think he makes a solid point in my favor.

"Indeed, a useful criterion for gauging a large language model’s quality might be the willingness of a company to use the text that it generates as training material for a new model. If the output of ChatGPT isn’t good enough for GPT-4, we might take that as an indicator that it’s not good enough for us, either. Conversely, if a model starts generating text so good that it can be used to train new models, then that should give us confidence in the quality of that text. (I suspect that such an outcome would require a major breakthrough in the techniques used to build these models.) If and when we start seeing models producing output that’s as good as their input, then the analogy of lossy compression will no longer be applicable."

I agree.

So here are five synthetic NLP papers. Here's a whitepaper arguing that models trained off GPT-3 synthetic data are BETTER than models trained off real-world data. Here's a random tutorial about how to use GPT-3 outputs to finetune GPT-3. Mostly.AI raised $31M to generate synthetic data from text. And if we expand our view even slightly, and take a peek at the computer vision world, here are three more companies that have collectively raised nearly $150M to train models based on synthetic image data. Meanwhile, there are active plans to train the next versions of Stable Diffusion on synthetic data (a popular topic in the LAION discord), and researchers have started pulling together the requisite datasets. I don't think this is a big secret -- even Forbes has caught on.

Of the points Ted makes in the New Yorker article, this was the one I was most disappointed by. There's a lot of information out there about people doing this exact thing. Is this sufficient to change his mental model about where we are, technologically?

It's About the Process

Ted closes with a rather strong claim, which is that ChatGPT is effectively useless for both creative and regular writing. Along the way, he gestures towards the teaching process -- that students who rely on ChatGPT to learn will be meaningfully worse at the process of writing.

I think there are a lot of possible responses here. I could point out that many authors believe something along the lines of 'Good authors borrow, great authors steal'. I could mention that most writing is emphatically not creative writing, and GPT will wholesale replace jobs like data entry, copywriting, even work like news bulletins a la the Associated Press. I could point out that GPT will likely be integrated as a very powerful autocomplete, which seems perfectly in line with existing tools. I could even gesture towards how in previous eras, well-meaning folks would wring their hands about the corrupting influence of newfangled tools like 'writing', and how wrong they turned out to be.

"...this discovery of yours will create forgetfulness in the
learners' souls, because they will not use their memories; they will trust to the external written characters and not remember of themselves. The specific which you have discovered is an aid not to memory, but to reminiscence, and you give your disciples not truth, but only the semblance of truth; they will be hearers of many things and will have learned nothing; they will appear to be omniscient and will generally know nothing; they will be tiresome company, having the show of wisdom without the reality."

-- Socrates, on, uh, writing things down.

But the response I *want* to give, the one that I believe deep in my gut, is that the best writers (and the best artists, and the best programmers, and ...) are those who bring these AI tools into their process. Thanks to the work I do, I get to bring AI design tools to artists, and every day I watch people 100x their rate of productivity. Those people iterate so much faster than everyone else that there is simply no meaningful competition. And much in the same way we have a generation of 'digital natives', I think we will have 'AI natives' -- children who learn, through immersion, how to utilize these tools to give themselves superhuman capabilities. And it'll be awesome to see.

All models are wrong...

Ted started out with a good idea. His 'lossy compression' model of machine learning is useful, and provides some good intuition about what these things are like. But he pushed this idea too far, and the model broke at the seams. Yes, ChatGPT is in some senses like a blurry JPG print. But it's actually a tangle of linear algebra, and any metaphor that attempts to describe parts of it will only succeed at describing, well, parts of it. In the meantime, I hope Ted takes the initiative to try to integrate GPT tools into his workflow. I suspect he may be pleasantly surprised at the results.

Oh, and an obligatory, relevant disclaimer: parts of this article were written by GPT.